New Features

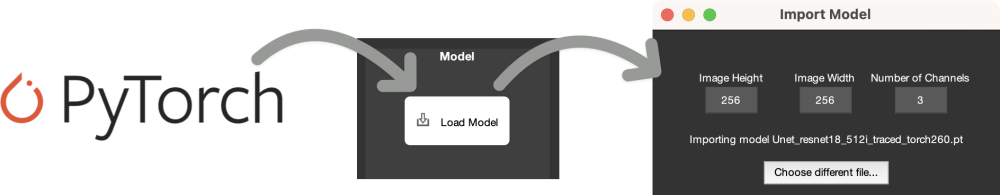

Import PyTorch Deep Learning Models


You can now load externally trained PyTorch segmentation models directly into MIPAR. This lets users integrate custom-developed models into their MIPAR recipes and workflows. It expands the range of available Deep Learning solutions and extends these external models by connecting them to the rest of MIPAR’s detection and analysis automation.

For detailed information, see PyTorch Model Import.

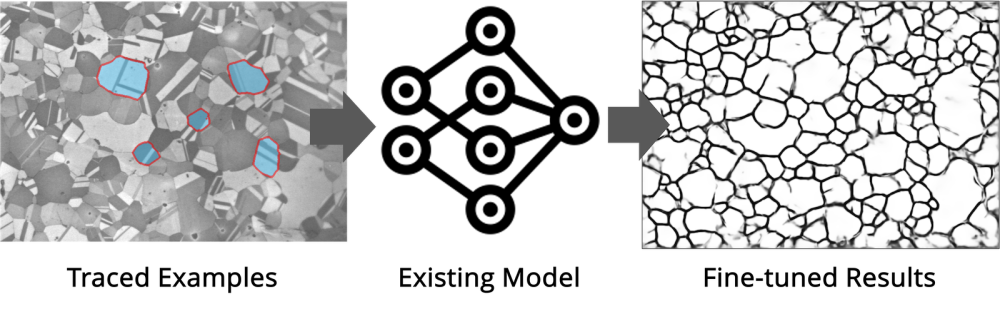

Better Model Updating (Fine-tuning)


The engine for updating Deep Learning models (also known as fine-tuning) has been significantly improved. When updating an existing model, MIPAR now preserves more learned parameters when training layers (classes) defined in the new data are shared with the original model. This leads to better results and faster convergence when updating models with preserved classes.
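To make the idea of preserving parameters for shared classes concrete, here is a minimal conceptual sketch in Python. It is not MIPAR's implementation, whose internals are not described in these notes. The function `carry_over_class_weights`, the class names, and the weight values are all hypothetical, and a model's per-class output weights are simplified to plain lists.

```python
def carry_over_class_weights(old_classes, old_weights, new_classes, fresh_row):
    """Build per-class weight rows for new_classes (hypothetical helper).

    Rows for classes that also exist in old_classes are copied from
    old_weights; classes that are new get a copy of fresh_row instead.
    """
    old_index = {name: i for i, name in enumerate(old_classes)}
    new_weights = []
    reused = []
    for name in new_classes:
        if name in old_index:
            # Shared class: keep what the original model already learned.
            new_weights.append(list(old_weights[old_index[name]]))
            reused.append(name)
        else:
            # New class: nothing to preserve, start from a fresh row.
            new_weights.append(list(fresh_row))
    return new_weights, reused


# Illustrative data only; these class names and values are made up.
old_classes = ["background", "grain", "pore"]
old_weights = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
new_classes = ["background", "pore", "crack"]

new_weights, reused = carry_over_class_weights(
    old_classes, old_weights, new_classes, fresh_row=[0.0, 0.0]
)
```

In this sketch, "background" and "pore" keep their previously learned rows while "crack" starts fresh, which is the intuition behind faster convergence when classes are preserved.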

For detailed information, see Updating Models.

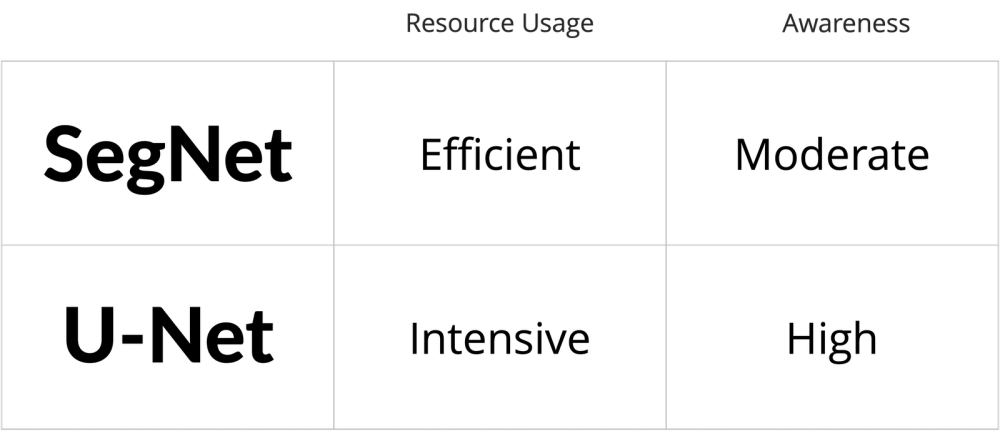

Train and Run U-Nets


MIPAR now supports training and applying U-Net models, a powerful Deep Learning architecture. Users can select U-Net as the model type within the AI Session Processor’s Advanced Settings window.

Up until this release, MIPAR’s Deep Learning capabilities were centered around the SegNet architecture. This architecture was chosen for its balance between resource efficiency and training performance. This remains the default model type and is recommended for most problems.

As MIPAR has continued to grow, we recognized the need to support alternative architectures. The U-Net architecture is now available as an option known for its effectiveness in complex segmentation tasks.

While U-Net models can be more resource-intensive during training and application compared to SegNet, they often excel at learning intricate spatial hierarchies and can achieve superior results on highly complex segmentation and classification problems.

Trained U-Net models can be used in any recipe step where a SegNet model was previously applicable. Both SegNet and U-Net models are saved using the same native .dlm file extension; the file simply stores whichever model type was selected prior to training.

Improvements

Base Package

- Introduced a "per-feature" option in custom measurement formulas that allows expressions such as:

  MIN(feature_length_x, feature_length_y, "per-feature")

  to return an elementwise minimum between the feature X size and feature Y size. Alternatively, not passing the "per-feature" flag returns the minimum across all features.

- Improved the custom formula parser to handle more complex formulas and improve error checking to reduce the risk of an invalid formula execution.

- Color by Measure and Local Measure now include the original image filename as a prefix in default names for exported data.
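The difference between the two MIN modes described above can be illustrated with a short Python sketch. This is not MIPAR's formula parser. `formula_min` is a hypothetical stand-in, the measurement values are made up, and the sketch assumes that "the minimum across all features" means a single value pooled over every feature of both measurements.

```python
def formula_min(*args):
    """Hypothetical stand-in for MIN in a custom formula (not MIPAR's parser)."""
    per_feature = bool(args) and args[-1] == "per-feature"
    columns = args[:-1] if per_feature else args
    if per_feature:
        # One result per feature: compare the measurements feature by feature.
        return [min(values) for values in zip(*columns)]
    # One result overall: pool every value from every measurement.
    return min(value for column in columns for value in column)


# Illustrative measurements for three features (values are made up).
feature_length_x = [4.0, 2.5, 7.0]
feature_length_y = [3.0, 6.0, 1.5]

per_feature_result = formula_min(feature_length_x, feature_length_y, "per-feature")
overall_result = formula_min(feature_length_x, feature_length_y)
```

With the flag, the result has one entry per feature (the smaller of its X and Y sizes). Without it, the result collapses to a single number.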

Deep Learning Extension

- The Apply Model interface has been redesigned to enhance the user experience, maximizing image space while providing a more intuitive flow for adjusting settings.

Bug Fixes

Base Package

- Fixed Color By Measure report generation when loading a pre-v5.0 recipe.

- Fixed Spotlight custom prompts when the entire image is selected in the companion.

- The Session Processor now reloads the available custom image measurements after they are edited in the Image Processor.

- Fixed a bug in the Report Generator where the report could fail in some edge cases when Random Intercepts did not result in a value.

- Fixed a bug when checking whether a recipe ends in a grayscale step if a Set Companion Image or Set Memory Image step is encountered before a decision can be made.

Deep Learning Extension

- Disabled the Preprocessing Recipe dropdown in the Call Output recipe step window.

Checkpoint

- Fixed a bug that caused the Project Overview to treat recipes as different when they contain recipe steps that auto-compute a parameter.
