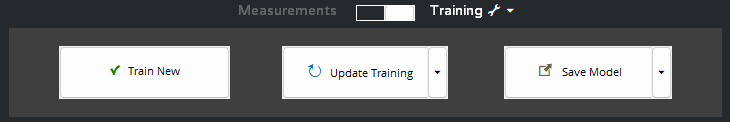

The labeled screenshot above shows the layout of the AI Session Trainer and the purpose of each user interface element.

Layout

Train New

Train a new deep learning model using the reference and BW images and the Training Settings.

Update Model

Update the existing deep learning model with the new images/layers in the session.

Save/Apply Model

Save the trained model, or apply the model in the image processor to the reference image in focus.

Training Settings

1. Tiles

Grid spacing used to split each image into tiles before training. Tiling reduces the memory load on the GPU and gives the training algorithm more samples to learn from, which tends to produce better results. (Example: Tiles = 3×2 splits each image into 6 sub-images.)
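To make the tiling arithmetic concrete, here is a minimal sketch of how a tile grid could map onto pixel boxes. The helper name `tile_boxes` is hypothetical and is not part of the AI Session Trainer; it also assumes that "3×2" means 3 columns by 2 rows, and the trainer's actual tiling (overlap, padding, edge handling) may differ.

```python
def tile_boxes(width, height, tiles_x, tiles_y):
    """Return (left, top, right, bottom) pixel boxes for a tiles_x-by-tiles_y grid.

    Hypothetical helper for illustration only. Assumes tiles_x counts columns
    and tiles_y counts rows. Integer division spreads any leftover pixels
    across the tiles so the boxes together cover the whole image exactly.
    """
    boxes = []
    for row in range(tiles_y):
        top = row * height // tiles_y
        bottom = (row + 1) * height // tiles_y
        for col in range(tiles_x):
            left = col * width // tiles_x
            right = (col + 1) * width // tiles_x
            boxes.append((left, top, right, bottom))
    return boxes


# A 1024x768 image with Tiles = 3x2 yields 6 sub-images.
boxes = tile_boxes(1024, 768, 3, 2)
print(len(boxes))  # 6
```

Each sub-image is smaller than the full image, which is why tiling lowers per-step GPU memory while multiplying the number of training samples.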

2. Size Factor

Resize factor applied to training images (0-1). Smaller factors reduce GPU memory load and significantly speed up training. A factor as low as 0.5 can often be used while still providing adequate precision.
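If the size factor scales both the width and the height of each image (an assumption here, not something this page states), the pixel count falls with the square of the factor: 0.5 leaves roughly a quarter of the original pixels. The sketch below shows that arithmetic with hypothetical helper names; real memory use and speed-up also depend on the model and hardware.

```python
def resized_shape(width, height, size_factor):
    """Hypothetical helper: scale both image dimensions by size_factor.

    Assumes size_factor is in (0, 1], where 1 means no resizing.
    """
    if not 0 < size_factor <= 1:
        raise ValueError("size_factor must be in (0, 1]")
    new_w = max(1, round(width * size_factor))
    new_h = max(1, round(height * size_factor))
    return new_w, new_h


def pixel_fraction(width, height, size_factor):
    """Fraction of the original pixel count remaining after resizing."""
    new_w, new_h = resized_shape(width, height, size_factor)
    return (new_w * new_h) / (width * height)


print(resized_shape(1024, 768, 0.5))   # (512, 384)
print(pixel_fraction(1024, 768, 0.5))  # 0.25
```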

3. Epochs

Number of epochs to train for. The table below offers recommendations based on the amount of training data:

| Number of Training Sub-images | Epochs |
|---|---|
| < 200 | 500-700 |
| 200-500 | 300-500 |
| 500-1000 | 100-300 |
| > 1000 | 50-100 |
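Because the table is keyed on sub-images rather than whole images, the Tiles setting feeds directly into the epoch choice: the number of sub-images is the number of training images multiplied by the number of tiles per image. The following sketch combines the two. The function names are hypothetical, and because the table's ranges share endpoints, it assumes that exactly 500 falls in the 200-500 row and exactly 1000 in the 500-1000 row.

```python
def count_sub_images(num_images, tiles_x, tiles_y):
    """Each training image is split into tiles_x * tiles_y sub-images."""
    return num_images * tiles_x * tiles_y


def recommended_epochs(num_sub_images):
    """Return the (low, high) epoch range from the recommendation table.

    Assumption: shared boundary values (500, 1000) go to the lower row.
    """
    if num_sub_images < 200:
        return (500, 700)
    if num_sub_images <= 500:
        return (300, 500)
    if num_sub_images <= 1000:
        return (100, 300)
    return (50, 100)


# 20 images with Tiles = 3x2 -> 120 sub-images -> 500-700 epochs.
n = count_sub_images(20, 3, 2)
print(n, recommended_epochs(n))  # 120 (500, 700)
```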

4. Processor

- GPU: Train the model on a single GPU (strongly recommended; see Deep Learning System Requirements for more information). The active GPU is set in the “GPU Computation” panel under File > Preferences.

- CPU: Train the model on the CPU.

- Multi-GPU: Train the model on multiple GPUs on your local system (available only when more than one GPU is detected).
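The availability rule for these options can be summarized in a few lines. This is a hypothetical sketch, not the application's code: only the Multi-GPU condition (more than one GPU detected) comes from this page, while offering the GPU option only when at least one GPU is present is an assumption.

```python
def available_processors(gpu_count):
    """Hypothetical sketch of which Processor options apply for a GPU count.

    From the text: Multi-GPU requires more than one detected GPU.
    Assumption: GPU requires at least one detected GPU; CPU is always offered.
    """
    options = ["CPU"]
    if gpu_count >= 1:
        options.insert(0, "GPU")
    if gpu_count > 1:
        options.append("Multi-GPU")
    return options


print(available_processors(2))  # ['GPU', 'CPU', 'Multi-GPU']
```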
