PyTorch Deep Learning models may be used in MIPAR recipes just like native MIPAR models. To process an image with a PyTorch model, select the .pt file from the Apply Model window as you would a native MIPAR .dlm file. Then, the “Import Model” window will open, allowing the user to provide input image parameters that PyTorch doesn’t typically retain and to verify the model can be imported without error. From there, the process of applying the model to the image is the same as with a native MIPAR model.

Activating a PyTorch Model

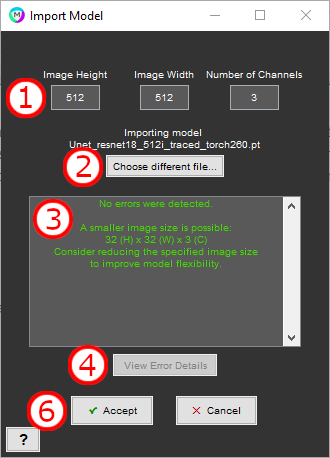

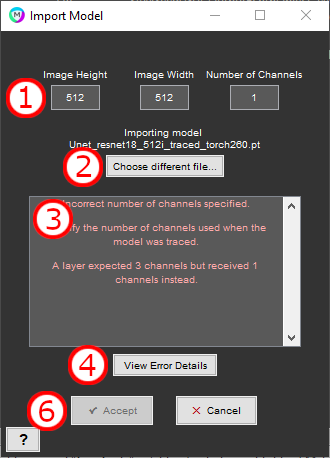

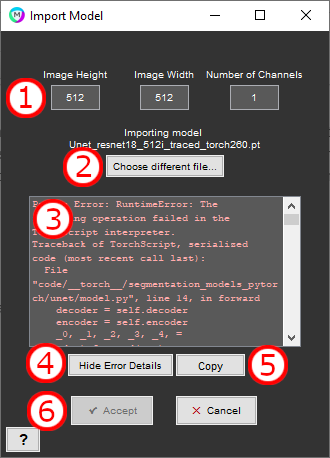

After selecting a .pt file from the Apply Model window, MIPAR will attempt to import the model and display the outcome in the Import Model window. If the import fails, the error message is displayed and another model file can be selected instead. If the import succeeds, the user can enter the parameters that characterize the image the model expects. If the provided combination of parameters results in an error, the error is displayed. Otherwise, a success message is displayed and the user can “Accept” the model activation.

Import Model Window

1. Image height, width, and channels

The size of the image the model expects as input. We recommend starting with the values used to trace the model.

2. Load model

Select a different model file (.pt) for import.

3. Message box

Displays information about the import process. Errors from model load or image size specification will be displayed here.

4. View / Hide error details

Shows the full text of any PyTorch errors from attempting to load the model or apply the model to placeholder data.

5. Copy

Copies to the clipboard the full text of any PyTorch errors, or the trace_and_save_model Python function (see below) if the loaded model is untraced.

6. Accept and Cancel

Accept to complete the import process and Cancel to abort. Both options then return to the Apply Model window.

Model Requirements

To be usable in MIPAR, a PyTorch model must satisfy several requirements:

- The model must derive from PyTorch’s `torch.nn.Module` class.
- The expected input must be a floating-point (i.e. `torch.float`) tensor. MIPAR will rescale input images to the range 0.0-1.0 before handing them to the model. No normalization (e.g. Z-score or Min-Max) will be applied by MIPAR; the model must perform any desired normalization itself.
- The expected output must be a floating-point (i.e. `torch.float`) tensor. MIPAR expects the output probabilities for each class to lie in the range 0.0-1.0. Values outside this range will be clipped. Any desired normalization (e.g. softmax) is assumed to be performed by the model.
- The expected input must be a `B x C x H x W` tensor, with either 1 or 3 channels `C`. The model must be able to accept inputs with a batch size `B` of 1.
- The expected output must be a `B x C x H x W` tensor. Output batch size `B` and image dimensions `H x W` must match the input tensor. Output channels `C` will correspond to the classes of features to be identified.
- The model should accept tensors residing on either the CPU (i.e. `torch.device('cpu')`) or the GPU (e.g. `torch.device('cuda:0')`).
- The model must be traced and saved with the `torch.jit` package.
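As an illustration of the requirements above, here is a minimal sketch of a model that would satisfy the tensor-shape and value-range rules. The class name `TinySegmenter`, its layer, and the normalization constants are hypothetical and chosen only for this example; a real model would use its own architecture and training-time normalization.

```python
import torch
import torch.nn as nn


class TinySegmenter(nn.Module):
    """Hypothetical two-class model, used only to illustrate the requirements."""

    def __init__(self, in_channels: int = 1, n_classes: int = 2):
        super().__init__()
        # padding=1 with a 3x3 kernel keeps H x W unchanged.
        self.conv = nn.Conv2d(in_channels, n_classes, kernel_size=3, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Input is a float tensor in 0.0-1.0; normalization is done inside
        # the model (assumed constants, for illustration only).
        x = (x - 0.5) / 0.5
        logits = self.conv(x)
        # Softmax over the channel axis yields per-class probabilities in 0.0-1.0.
        return torch.softmax(logits, dim=1)


model = TinySegmenter(in_channels=1, n_classes=2).eval()
example = torch.rand(1, 1, 64, 48)  # B x C x H x W, batch size 1, 1 channel
with torch.no_grad():
    probs = model(example)
print(tuple(probs.shape))
```

The output keeps the batch size and the `H x W` dimensions of the input, while its channel count equals the number of classes.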

The following Python function can be used to perform the trace and save operations, as well as verify the input and output requirements:

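```python
import torch


def trace_and_save_model(model: torch.nn.Module, n_channels: int,
                         image_height: int, image_width: int, save_name: str):
    # Only 1-channel or 3-channel inputs are supported.
    assert n_channels == 1 or n_channels == 3

    # Placeholder input: B x C x H x W with batch size 1.
    example = torch.rand(1, n_channels, image_height, image_width)

    # Trace on the CPU first. Tensor.to() returns a new tensor, so reassign it.
    device = torch.device("cpu")
    example = example.to(device)
    model.to(device)
    traced = torch.jit.trace(model, example)

    # Verify the output requirements.
    output = traced(example)
    assert torch.is_floating_point(output)
    assert output.shape[0] == example.shape[0]  # batch size matches
    assert output.shape[2] == example.shape[2]  # height matches
    assert output.shape[3] == example.shape[3]  # width matches

    # If a GPU is available, check that the traced model also runs there.
    if torch.cuda.is_available():
        device = torch.device("cuda:0")
        example = example.to(device)
        traced.to(device)
        _ = traced(example)

    traced.save(save_name + ".pt")
```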
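Before selecting a saved file in MIPAR, it can be worth confirming that it reloads and runs outside the training script. The sketch below traces a small stand-in network, saves it to a temporary file, reloads it with `torch.jit.load`, and applies it to placeholder data. The stand-in network and the file name `tiny_model.pt` are hypothetical, and this only mimics the kind of check described for the Import Model window; it is not MIPAR's own import code.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Hypothetical stand-in for a real network: one conv layer followed by softmax.
model = nn.Sequential(
    nn.Conv2d(3, 2, kernel_size=3, padding=1),
    nn.Softmax(dim=1),
).eval()

example = torch.rand(1, 3, 32, 32)  # B x C x H x W
traced = torch.jit.trace(model, example)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tiny_model.pt")  # hypothetical file name
    traced.save(path)
    # map_location="cpu" places the loaded model on the CPU
    # regardless of the device it was saved from.
    loaded = torch.jit.load(path, map_location="cpu")

with torch.no_grad():
    output = loaded(torch.rand(1, 3, 32, 32))  # placeholder data

print(tuple(output.shape))
```

If the file reloads and the output has the expected `B x C x H x W` shape here, load and shape errors in the Import Model window are less likely.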
