MIPAR allows you to update, or fine-tune, existing Deep Learning models (.dlm files) using new training data. Fine-tuning is the process of taking a pre-trained model (one that has already learned patterns from a previous dataset) and training it further on a new, often smaller or more specific, dataset. Because this approach builds on the knowledge the model has already captured, it typically shortens training, reduces the amount of new data required, and can reach high accuracy even with limited new annotations.

Why Update a Model?

Instead of training a completely new model from scratch, fine-tuning offers several advantages:

1. Leverages Prior Learning: The existing model has already learned fundamental features and patterns from its original training data. Fine-tuning builds upon this foundation, rather than starting from zero.

2. Faster Training: Since the model starts with learned parameters, it often converges to a good solution much faster than training a model from scratch.

3. Reduced Data Requirement: Fine-tuning typically requires significantly less new training data compared to training a new model, as you are primarily adjusting the existing knowledge to the nuances of the new data.

4. Specialization: You can adapt a general-purpose model (e.g., one trained on various particle types) to a highly specific task (e.g., identifying only one specific type of particle under unique imaging conditions) more effectively than by training a new model.

5. Improved Parameter Preservation: MIPAR’s updating process preserves more learned parameters when training layers (classes) are shared between the old model and the new training data, which makes the update more effective and efficient.
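To picture why shared classes matter, here is a minimal, hypothetical sketch. It is not MIPAR's implementation, which this article does not describe; it assumes a toy model in which each class owns a list of learned output weights. Classes present in both the old model and the new training reuse their weights, while classes that are new start from small random values. The names build_updated_head, old_head, and the class names are invented for illustration.

```python
import random
from typing import Dict, List

# Hypothetical illustration only: MIPAR's internal update logic is not documented here.
# In this toy model, each class name maps to a list of "learned" output weights.
Head = Dict[str, List[float]]


def build_updated_head(old_head: Head, new_classes: List[str],
                       n_weights: int, seed: int = 0) -> Head:
    """Start an update from the old model, reusing weights for shared classes."""
    rng = random.Random(seed)
    head: Head = {}
    for cls in new_classes:
        if cls in old_head:
            # Class shared with the original model: keep its learned weights (copied).
            head[cls] = list(old_head[cls])
        else:
            # Class not seen before: start from small random values.
            head[cls] = [rng.gauss(0.0, 0.01) for _ in range(n_weights)]
    return head


old_head = {"particle": [0.5, -0.2], "matrix": [0.1, 0.3]}
updated = build_updated_head(old_head, ["particle", "pore"], n_weights=2)
print(sorted(updated))      # ['particle', 'pore']
print(updated["particle"])  # [0.5, -0.2]
```

In this toy, the more classes the new training shares with the old model, the more weights start from learned values instead of random ones, which is the intuition behind point 5.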

When to Update vs. Train a New Model

Choosing between updating an existing model and training a new one depends on your specific goals and data:

Consider updating if:

- Your new task is similar to the task the original model was trained for (e.g., segmenting particles in slightly different microscopy images).

- You have a limited amount of new annotated data.

- You want to incrementally improve an existing model’s performance on new examples it previously struggled with.

- You want to extend a model’s capability to handle variations not present in the original training set (e.g., different lighting, new artifacts).

- You are satisfied with the original model’s core parameters (Model Depth, Filter Size, Model Type – SegNet or U-Net).

Consider training a new model if:

- Your new task is drastically different from the original model’s task.

- You have a large amount of new annotated data sufficient for training from scratch.

- You want to experiment with different model parameters (e.g., switching between SegNet and U-Net, or changing depth and filter size; these parameters cannot be changed during an update).

- The original model performs very poorly, and you suspect starting fresh might yield better results.

- You do not have access to the original .dlm file you wish to update.
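The checklists above can be condensed into a small decision helper. This is a hypothetical sketch, not a MIPAR feature: the Situation fields and the recommend function are invented names. It treats the two conditions an update cannot work around (no original .dlm file, or a needed architecture change) as hard limits, and the remaining criteria as signals to consider training a new model.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    # Hypothetical fields summarizing the criteria listed above.
    have_original_dlm: bool
    needs_new_architecture: bool  # e.g., SegNet -> U-Net, or new depth/filter size
    task_similar: bool
    large_new_dataset: bool       # enough new annotations to train from scratch
    original_performs_very_poorly: bool


def recommend(s: Situation) -> str:
    # Hard limits: an update needs the original .dlm and keeps its architecture.
    if not s.have_original_dlm or s.needs_new_architecture:
        return "train new"
    # Softer signals that favor starting fresh.
    if not s.task_similar or s.large_new_dataset or s.original_performs_very_poorly:
        return "consider training new"
    return "update"


print(recommend(Situation(have_original_dlm=True, needs_new_architecture=False,
                          task_similar=True, large_new_dataset=False,
                          original_performs_very_poorly=False)))  # update
```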

The Updating Process (Step-by-Step)

Follow these steps to update an existing Deep Learning model in MIPAR:

1. Prepare the Training Session:

- Start a new MIPAR session by launching the AI Session Processor.

- Alternatively, if you identified images that need improvement during a batch process, open the relevant session (.ssn or .ssn2 file), which contains those images and possibly their initial (incorrect) segmentations.

2. Add/Refine Training Data:

- Load the images you want to use for updating the model into the session, if they are not already present.

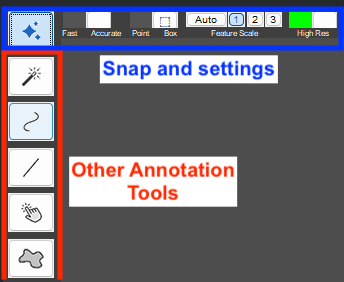

- Add or correct annotations for these images. This involves using MIPAR’s annotation tools to create accurate ground truth segmentations for the model to learn from. Make sure the annotations represent the desired output for these specific images. Start small and add more annotations if needed. We strongly recommend using Snap, if available, for the best annotation speed and precision.

- For an example, see Tracing in AI Session Processor.

3. Select the Model to Update:

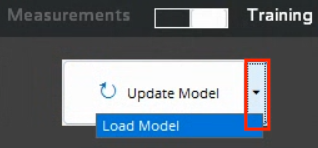

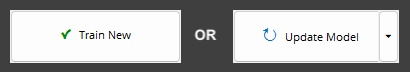

- Click the dropdown arrow located immediately to the right of the Update Model button.

- Select Load Model.

- Browse to and select the existing .dlm file you wish to fine-tune.

- NOTE: .dlm files can be saved from Apply Model recipe steps: edit the step and click “Save” in the lower-right corner of the top panel. This lets you update models you received in a recipe, even if you did not train them yourself.

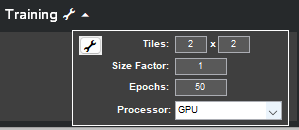

4. Review/Adjust Training Settings:

- Once the model is loaded, its name will appear in the Properties panel.

- Optionally, expand the training settings and open Advanced Settings to review and adjust some training parameters.

- NOTE: The settings on the Model Parameters tab of the Advanced Settings window (Model Depth, Filter Size, and Model Type) are ignored when updating. The architecture is determined entirely by the .dlm file you loaded in Step 3 and cannot be changed during the update, so the model keeps the model type (SegNet or U-Net), depth, and filter size it was originally trained with.

5. Initiate the Update:

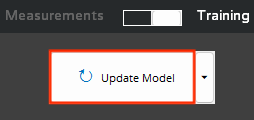

- Click the Update Model button.

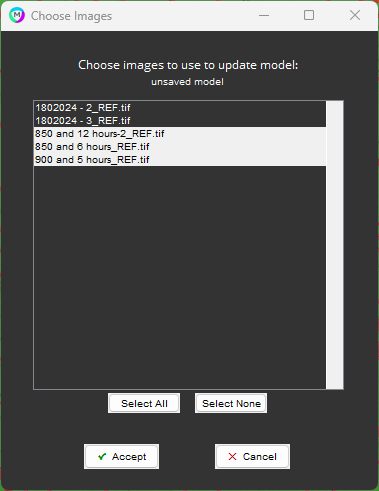

6. Select Images for Update:

- A dialog box will appear, listing all images currently loaded in the session.

- Click and drag within the list, use Shift+click, or use the Select All/None buttons to choose the images for the update. Typically, these are the new images you added or the ones whose annotations you corrected.

- Click Accept.

7. Save Updated Model:

- Once updating is complete, click Save Model to export the updated model. We highly recommend saving it under a new name so you do not overwrite the original pre-trained model.
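The note in step 4 (Model Parameters settings are ignored during an update) can be pictured as a simple override rule. The sketch below is hypothetical and does not reflect MIPAR's internal code: the keys model_type, model_depth, and filter_size stand in for the Model Type, Model Depth, and Filter Size fields, and epochs is an invented example of an adjustable training parameter. Whatever the dialog holds, the architecture values always come from the loaded .dlm file.

```python
# Hypothetical names: these keys stand in for the Model Type, Model Depth,
# and Filter Size fields on the Model Parameters tab.
ARCHITECTURE_KEYS = ("model_type", "model_depth", "filter_size")


def effective_update_settings(dialog_settings: dict, loaded_model: dict) -> dict:
    """Return the settings an update would actually use in this sketch."""
    settings = dict(dialog_settings)        # copy; don't mutate the caller's dict
    for key in ARCHITECTURE_KEYS:
        settings[key] = loaded_model[key]   # architecture always comes from the .dlm
    return settings


dialog = {"model_type": "SegNet", "model_depth": 3, "filter_size": 3, "epochs": 50}
dlm = {"model_type": "U-Net", "model_depth": 4, "filter_size": 5}
print(effective_update_settings(dialog, dlm))
# {'model_type': 'U-Net', 'model_depth': 4, 'filter_size': 5, 'epochs': 50}
```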

See Applying for information on applying the model.
