AI changes the cybersecurity threat landscape

Adopting any new technology brings new risks, and AI may bring more than most. While AI presents opportunities, it also introduces unique risks and compliance challenges that demand attention. As with any new technology, excitement about AI can relegate critical security and assurance considerations to afterthoughts. Managing the security risks of AI systems is critical, as failing to do so can have severe consequences.

How are AI systems similar to what we already know?

In many respects, systems leveraging AI models are similar to the IT systems we’ve been deploying for years. Both run on familiar infrastructure and services with known risks and security patterns. For both, security of the infrastructure provides the needed foundation. Data access, data governance, and data control yield outsized benefits for both, and in both the greatest risk follows the sensitive data. Because AI systems are still IT systems, organizations need to apply conventional IT security controls to these systems.

How are AI systems different, from a security perspective?

The deployment of AI introduces novel security threats and exacerbates existing ones, requiring additional cybersecurity measures that are not comprehensively addressed by current risk frameworks and approaches (including the HITRUST CSF up to this point). Compared to traditional software, AI-specific security risks that are new or increased include the following:

- Issues in the data used to train AI models can bring about unwanted outcomes, as intentional or unintentional changes to AI training data have the potential to fundamentally alter AI system performance.

- AI models and their associated configurations (such as the metaprompt) are high-value targets for attackers, who are discovering new, difficult-to-detect approaches to breach AI systems.

- Modern AI deployments rely on third-party service providers to an even greater degree than traditional software, making supply chain risks such as software and data supply chain poisoning a very real threat.

- AI systems may require more frequent maintenance, along with defined triggers for corrective maintenance, because of changes in the threat landscape and data, model, or concept drift.
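
To make the training data risk above concrete, here is a minimal sketch of one possible safeguard: recording a SHA-256 baseline for a training data directory and later checking for added, removed, or modified files. The function names (`sha256_of`, `build_manifest`, `find_changes`) and the directory layout are assumptions made for illustration. They are not drawn from the HITRUST CSF or any specific product, and a real control would also need to protect the baseline itself.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its digest (the approved baseline)."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def find_changes(data_dir: Path, baseline: dict[str, str]) -> dict[str, list[str]]:
    """Compare the files currently on disk against the baseline manifest."""
    current = build_manifest(data_dir)
    shared = set(current) & set(baseline)
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(n for n in shared if current[n] != baseline[n]),
    }
```

A check like this only detects that the data changed after the baseline was taken. It cannot tell whether the baseline data was trustworthy to begin with.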

Further, generative AI systems face additional, distinct security threats, including the following:

- GenAI systems produce random and unexpected output by design, and that output can be completely inaccurate. Combined with the confidence with which genAI systems communicate their responses, this heightens the risk of overreliance on inaccurate output.

- The randomness of genAI system outputs challenges long-standing approaches to software quality assurance testing.

- Because the foundational models that underpin genAI are commonly trained on data sourced from the open Internet, these systems may produce output that is offensive or substantially similar to copyrighted works.

- Because genAI models are often tuned or augmented with sensitive information, genAI output has the potential to inappropriately disclose this sensitive data to users of the AI system.

- Organizations are actively giving genAI systems access to additional capabilities through language model tools such as agents and plugins, which may (a) give rise to excessive agency and (b) extend the system’s security and compliance boundary.
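
The quality assurance challenge noted above can be illustrated with a small sketch. Because repeated calls may return different text, an exact-match test is brittle. One alternative is to sample the system many times and check properties that every acceptable output must satisfy. Everything here is hypothetical: `fake_generate` is a stand-in for a real genAI call, and the invariants (valid JSON, an allowed `risk_level`, a length budget) are example properties chosen for illustration.

```python
import json
import random
from typing import Callable


def fake_generate(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a genAI call: output varies between runs."""
    note = rng.choice(["Sure.", "Certainly.", "Here you go."])
    level = rng.choice(["low", "medium", "high"])
    return json.dumps({"note": note, "risk_level": level})


def check_invariants(output: str) -> list[str]:
    """Return the violated properties (an empty list means the sample passed)."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(parsed, dict):
        return ["output is not a JSON object"]
    problems = []
    if parsed.get("risk_level") not in {"low", "medium", "high"}:
        problems.append("risk_level outside allowed values")
    if len(output) > 500:
        problems.append("output exceeds length budget")
    return problems


def failure_rate(generate: Callable[[str], str], prompt: str, samples: int = 50) -> float:
    """Sample the generator repeatedly and report the share of failing outputs."""
    failures = sum(1 for _ in range(samples) if check_invariants(generate(prompt)))
    return failures / samples


rng = random.Random(0)  # seeded only so the stub is repeatable
rate = failure_rate(lambda p: fake_generate(p, rng), "Classify this ticket.")
```

With a real model, the measured rate would typically be compared against an agreed threshold rather than required to be zero.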

How is HITRUST helping address this need?

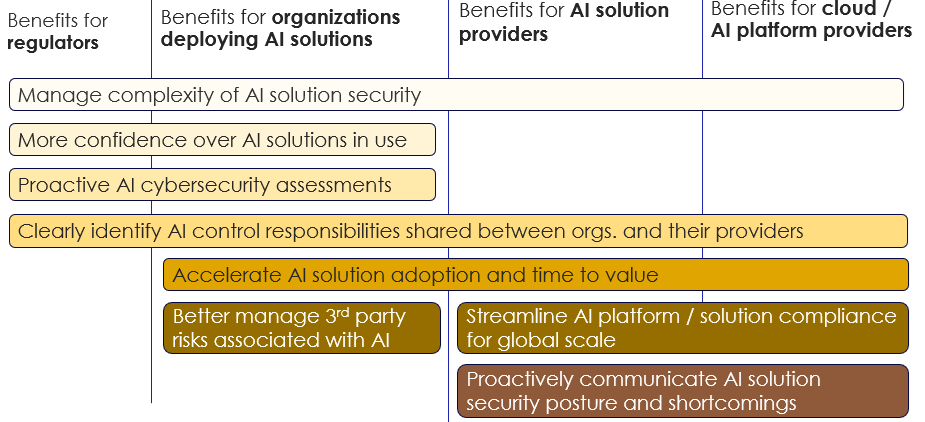

Organizations must address the cybersecurity of AI systems by (1) extending existing IT security practices and (2) proactively addressing AI-specific security concerns through new IT security practices. The HITRUST AI Cybersecurity Certification equips organizations to do this effectively by providing prescriptive and relevant AI security controls, a means to assess those controls, and reliable reporting that can be shared with internal and external stakeholders.