The following examples outline how to use the HITRUST scoring rubric to identify the appropriate Policy, Procedure, and Implemented strength and coverage over a number of scenarios. Before reading the examples, please review Chapter 9: Control Maturity Levels and the HITRUST CSF Control Maturity Scoring Rubric.

For examples pertaining to the Measured and Managed maturity levels, see A-7: Rubric Scoring – Measured and Managed.

Example #1

| Scenario BUID: 0410.01x1System.12 | CVID:0271.0 If it is determined that encryption is not reasonable and appropriate, the organization documents its 1. rationale, and 2. acceptance of risk. |

Evaluation

Policy:

A “Mobile Device Policies and Procedures” document states, “All PHI, PII, and sensitive data shall be encrypted,” and also states, “All mobile devices, laptops, smartphones, and other portable media shall be encrypted.”

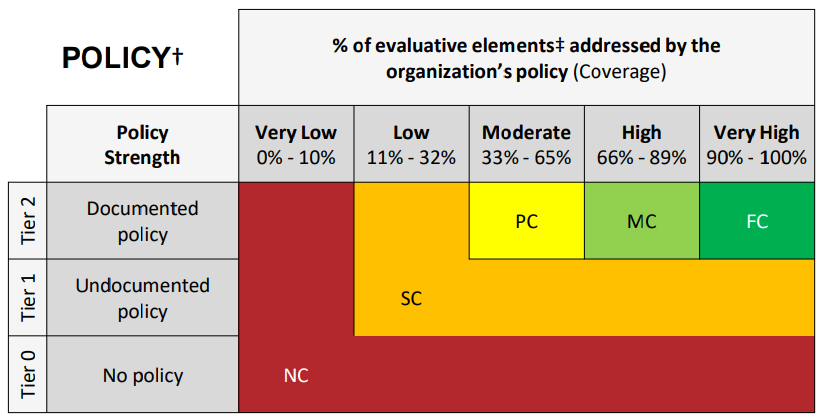

Policy Strength: In this scenario, a documented mobile device policy exists (see Chapter 9.1 Policy Maturity Level) so the Policy strength appears to be “Tier 2 – Documented Policy”.

Policy Coverage: While the policy addresses the encryption of mobile devices, it does not address the documentation of 1) rationale and 2) acceptance of risk when the organization deems that encryption is not reasonable and appropriate. Therefore, the Policy coverage is 0/2 = 0% or “Very Low”.

Policy Score: Use the strength and coverage to determine the final Policy score according to the rubric. “Tier 2” strength and “Very Low” coverage indicate a score of Non-Compliant or 0%.

Alternative Approach – Once it is determined that the Mobile Device Policies and Procedures document does not address this requirement statement’s evaluative elements, the External Assessor may opt to investigate whether an undocumented policy exists (see Chapter 9.1 Policy Maturity Level). In this scenario, the implementation testing described below indicates that there is not an undocumented policy that is consistently observed. This alternative approach therefore results in a Policy strength of “Tier 0 – No Policy”, which always indicates a score of Non-Compliant or 0%. Note that both approaches result in the same Policy score.

Procedure:

The Mobile Device Policies and Procedures document states, “All PHI, PII, and sensitive data shall be encrypted,” and also states, “All mobile devices, laptops, smartphones, and other portable media shall be encrypted.”

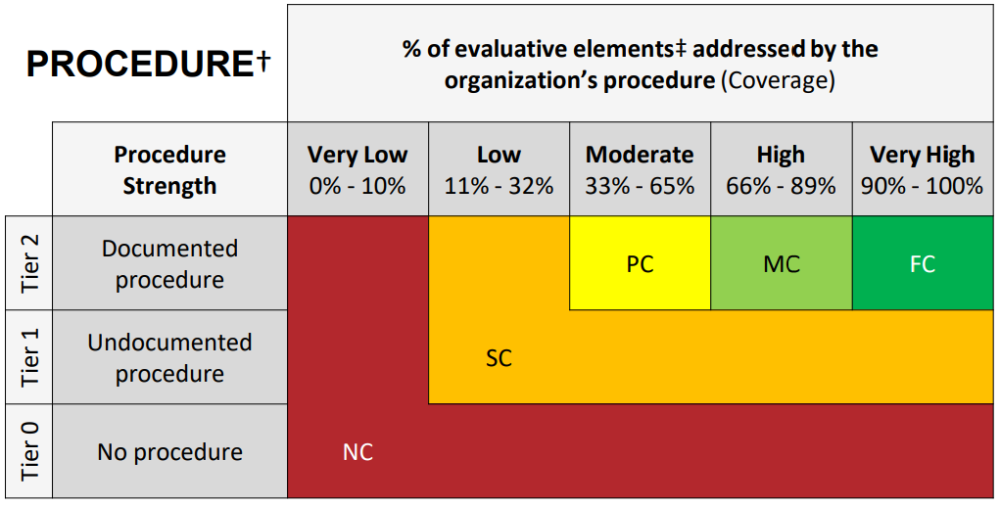

Procedure Strength: To determine the appropriate Procedure score, first identify the Procedure strength. Despite its name, the “Mobile Device Policies and Procedures” document does not contain any procedures, as it does not describe the operational aspects of how to perform the requirements. Therefore, a documented procedure does not exist and the External Assessor may investigate whether an undocumented procedure exists (see Chapter 9.2 Procedure Maturity Level).

The External Assessor’s inquiries of various members of the workforce showed that no consensus exists on what to do if it is determined encrypting a mobile device is not reasonable and appropriate. Further, the implementation testing described below confirms that there are no undocumented procedures that are consistently observed. Therefore, Procedure strength is “Tier 0 – No Procedures”.

Procedure Coverage: Since Procedure strength is “Tier 0”, there is no need to determine Procedure coverage.

Procedure Score: “Tier 0” strength always indicates a final Procedure score of Non-Compliant or 0%.

Implemented:

A comparison of a system-generated population of mobile devices (including laptops and phones) against the IT asset inventory (deemed complete by the testing of another requirement statement) showed that 75 of the organization’s 100 mobile devices are encrypted. However, the organization has not documented the rationale for its failure to encrypt the remaining 25 mobile devices.

Implemented Coverage: To determine implementation coverage, identify the percentage of the evaluative elements implemented. In this example, there was no documentation of rationale or acceptance of risk for the 25 mobile devices that were not encrypted. Therefore, neither of the two evaluative elements has been implemented and coverage is 0% or “Very Low”.

Implemented Score: In this case, the final Implemented score can be determined from the coverage since the testing demonstrated the requirement statement was not being performed. “Very Low” coverage will always result in a score of Non-Compliant or 0%.

Example #2

| Scenario BUID: 1814.08d1Organizational.12 | CVID:0732.0 Appropriate fire extinguishers 1. are located throughout the facility, and 2. are no more than fifty (50) feet away from critical electrical components. Fire detectors (e.g., smoke or heat activated) are installed on and in the 3. ceilings and 4. floors. |

Evaluation

In this scenario, the evaluation for each maturity level indicates varied strength and coverage across the three scoping components (Office, DC1, and DC2).

Policy:

The Assessed Entity has a “Data Center Environmental Protections Policy”, but this policy is only applicable to data centers (not office buildings).

- The Data Center Environmental Protections Policy states, “Fire detectors must be installed in the ceilings. Clearly marked fire extinguishers must be placed throughout the facility.” There is no mention of fire detectors installed in the floors and no further mention of placement of fire extinguishers relative to critical electronics.

- Further, no policies exist addressing environmental protections in corporate offices, which is unsurprising given no fire protections were noted during an office tour.

In this example, the steps outlined in the HITRUST rubric for “varied scope on each level” will be used to determine the final score for the Policy maturity level.

Step 1) Decompose/separate scope into individual components against which the rubric can be applied.

This scenario has three individual scope components:

- Office

- DC1

- DC2

Step 2) Apply the HITRUST CSF control maturity scoring rubric to each individual scope component.

- Office

Policy Strength: In this scenario, no policy exists for the Office, so the Policy strength is “Tier 0 – No Policy”.

Policy Score: In this case, the Policy score can be determined from the strength alone. “Tier 0 – No Policy” strength always indicates a score of Non-Compliant or 0%.

- DC1

Policy Strength: In this scenario, a documented policy exists for DC1, so the Policy strength appears to be “Tier 2 – Documented Policy”.

Policy Coverage: To determine the Policy coverage, consider the percentage of evaluative elements covered for DC1. Evaluative elements 1 and 3 are covered by the policy, so the percentage of evaluative elements covered is 2/4 = 50%. This indicates “Moderate” coverage.

Policy Score: Use the strength and coverage to determine the Policy score according to the rubric. “Tier 2” strength and “Moderate” coverage indicate a score of Partially Compliant or 50%.

- DC2

Policy Strength: In this scenario, a documented policy exists for DC2, so the Policy strength appears to be “Tier 2 – Documented Policy”.

Policy Coverage: To determine the Policy coverage, consider the percentage of evaluative elements covered for DC2. Evaluative elements 1 and 3 are covered by the policy, so the percentage of evaluative elements covered is 2/4 = 50%. This indicates “Moderate” coverage.

Policy Score: Use the strength and coverage to determine the Policy score according to the rubric. “Tier 2” strength and “Moderate” coverage indicate a score of Partially Compliant or 50%.

Step 3) Calculate an average score.

(0% + 50% + 50%)/3 = 33.3%

Step 4) Refer to the “Range of Average Scores” in the legend of the HITRUST rubric to determine the rating.

Since 33.3% falls within the range of 33% – 65%, the final Policy score is Partially Compliant or 50%.
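Steps 1 through 4 above can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the HITRUST rubric: the function name `rating_from_average` and the `RATING_BANDS` table are hypothetical, and only the 33% – 65% band is stated in this example. The other lower bounds, and the 75% value for Mostly Compliant, are assumptions that should be checked against the “Range of Average Scores” legend.

```python
# (lower bound %, rating, rubric score %), checked from highest to lowest.
# Only the 33%-65% Partially Compliant band is stated in the example;
# every other bound (and the 75% for Mostly Compliant) is an assumption.
RATING_BANDS = [
    (90.0, "Fully Compliant", 100),
    (66.0, "Mostly Compliant", 75),
    (33.0, "Partially Compliant", 50),
    (11.0, "Somewhat Compliant", 25),
    (0.0, "Non-Compliant", 0),
]


def rating_from_average(average_pct):
    """Map an averaged score to a (rating, rubric score) pair."""
    for lower_bound, rating, score in RATING_BANDS:
        if average_pct >= lower_bound:
            return rating, score
    raise ValueError("average must not be negative")


# Step 2 results: the Policy score for each scope component.
component_scores = {"Office": 0, "DC1": 50, "DC2": 50}

# Step 3: simple average across the scope components.
average = sum(component_scores.values()) / len(component_scores)

# Step 4: look up the rating for the averaged score.
rating, score = rating_from_average(average)
print(f"{average:.1f}% -> {rating} ({score}%)")
```

The same calculation applies unchanged to any maturity level scored with the “varied scope on each level” steps, since only the per-component scores differ.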

Procedure:

For the two data centers, the Assessed Entity has a written “Data Center Fire Protections Procedure” addressing operational aspects of how to install and maintain fire detectors on the ceilings and fire extinguishers throughout the facilities. The policies and procedures do not specify that fire extinguishers must be placed no more than fifty (50) feet away from critical electrical components or that fire detectors must be installed in the floors. Further, no procedures exist addressing environmental protections in corporate offices, which the External Assessor found unsurprising given no fire protections were noted.

Step 1) Decompose/separate scope into individual components against which the rubric can be applied.

This scenario has three individual scope components:

- Office

- DC1

- DC2

Step 2) Apply the HITRUST CSF control maturity scoring rubric to each individual scope component.

- Office

Procedure Strength: In this scenario, no procedure exists for the Office, so the Procedure strength is “Tier 0 – No Procedure”.

Procedure Score: In this case, the Procedure score can be determined from the strength alone. “Tier 0 – No Procedure” strength always indicates a score of Non-Compliant or 0%.

- DC1

Procedure Strength: In this scenario, a documented procedure exists for DC1, so the Procedure strength appears to be “Tier 2 – Documented Procedure”.

Procedure Coverage: To determine the Procedure coverage, consider the percentage of evaluative elements covered for DC1. Evaluative elements 1 and 3 are covered by the procedure, so the percentage of evaluative elements covered is 2/4 = 50%. This indicates “Moderate” coverage.

Procedure Score: Use the strength and coverage to determine the Procedure score according to the rubric. “Tier 2” strength and “Moderate” coverage indicate a score of Partially Compliant or 50%.

- DC2

Procedure Strength: In this scenario, a documented procedure exists for DC2, so the Procedure strength appears to be “Tier 2 – Documented Procedure”.

Procedure Coverage: To determine the Procedure coverage, consider the percentage of evaluative elements covered for DC2. Evaluative elements 1 and 3 are covered by the procedure, so the percentage of evaluative elements covered is 2/4 = 50%. This indicates “Moderate” coverage.

Procedure Score: Use the strength and coverage to determine the Procedure score according to the rubric. “Tier 2” strength and “Moderate” coverage indicate a score of Partially Compliant or 50%.

Step 3) Calculate an average score.

(0% + 50% + 50%)/3 = 33.3%

Step 4) Refer to the “Range of Average Scores” in the legend of the HITRUST rubric to determine the rating.

Because 33.3% falls within the range of 33% – 65%, the final Procedure score is Partially Compliant or 50%.

Implemented:

During the visit to each in-scope facility, the External Assessor noted the following:

- An Office tour revealed that no fire extinguishers or fire detectors were present.

- A DC1 tour revealed that fire extinguishers are present throughout the facility and are no more than fifty (50) feet away from critical electrical components, but that fire detectors are not installed.

- A DC2 tour yielded no exceptions. All evaluative elements were met.

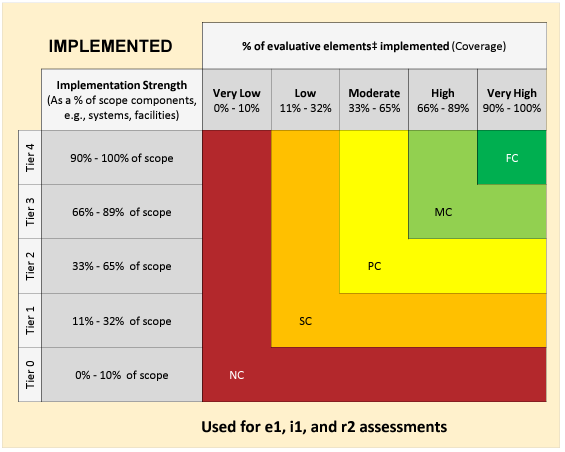

For the Implemented maturity level, the scoring does not need to be decomposed into separate scope components since the Implemented strength takes into account the overall scope against the evaluative elements.

The requirement statement’s Implemented strength is evaluated by considering the Assessed Entity’s control application across the assessment scope. The tier in the rubric for Implemented strength can be calculated based on the number of scope components where the control is being applied. The application of these controls can be determined using corresponding observations, inspections, or walkthroughs for each scope component.

In this example, it was determined that the controls for this requirement statement were not being applied for the Office location. However, the controls were being applied at each of the Data Centers. As a result, the Implemented strength will be calculated at (0% + 100% + 100%)/3 = 67%, or Tier 3.
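The strength calculation above might be sketched as follows. This is a hedged illustration: the function name `implemented_strength` and the tier table are hypothetical, and the only data point taken from this example is that 67% corresponds to Tier 3. The remaining tier boundaries are assumptions to be verified against the rubric.

```python
# Assumed lower bounds (in %) for the Implemented strength tiers.
# Only "67% -> Tier 3" comes from the example; the rest are assumptions.
ASSUMED_TIER_LOWER_BOUNDS = [(90, 4), (66, 3), (33, 2), (11, 1), (0, 0)]


def implemented_strength(applied_by_component):
    """Return (percent of scope components applying the control, tier)."""
    applied_count = sum(applied_by_component.values())
    percent = round(100 * applied_count / len(applied_by_component))
    for lower_bound, tier in ASSUMED_TIER_LOWER_BOUNDS:
        if percent >= lower_bound:
            return percent, tier
    raise ValueError("percent must be between 0 and 100")


# Whether the control is being applied at each scope component.
applied = {"Office": False, "DC1": True, "DC2": True}

percent, tier = implemented_strength(applied)
print(f"Implemented strength: {percent}% -> Tier {tier}")
```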

The maturity rating for Implemented coverage can be readily computed by leveraging a single table for coverage.

Example 2 Assessment Results for Coverage – Implemented

| Evaluative Elements | Office | DC1 | DC2 |
| --- | --- | --- | --- |
| Fire Extinguishers Throughout | Not Implemented | Implemented | Implemented |
| Fire Extinguishers ≤ 50 ft | Not Implemented | Implemented | Implemented |
| Fire Detectors in Ceilings | Not Implemented | Not Implemented | Implemented |
| Fire Detectors in Floors | Not Implemented | Not Implemented | Implemented |
| Coverage | 0% | 50% | 100% |

A simple average yields (0% + 50% + 100%)/3 = 50%, or “Moderate” for Implemented coverage.
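The coverage table and its average can be reproduced with a short sketch. The data structure and variable names here are illustrative assumptions; the Implemented / Not Implemented values are taken directly from the table for this example.

```python
# Evaluative elements, in the order used by the per-site lists below.
ELEMENTS = [
    "Fire extinguishers throughout",
    "Fire extinguishers within 50 feet",
    "Fire detectors in ceilings",
    "Fire detectors in floors",
]

# True = Implemented, False = Not Implemented, one flag per element.
results = {
    "Office": [False, False, False, False],
    "DC1": [True, True, False, False],
    "DC2": [True, True, True, True],
}

# Coverage per scope component: share of evaluative elements implemented.
coverage = {
    site: 100 * sum(flags) / len(ELEMENTS) for site, flags in results.items()
}

# Overall Implemented coverage: simple average across scope components.
overall_coverage = sum(coverage.values()) / len(coverage)

print(coverage)
print(f"Overall Implemented coverage: {overall_coverage:.0f}%")
```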

Implemented Score: On the Implemented rubric, the intersection of “Tier 3” strength and “Moderate” coverage is Partially Compliant or 50%.

Example #3

| Scenario BUID: 06.09b1System.2 | CVID:0271.0 Changes to information systems (including changes to applications, databases, configurations, network devices, and operating systems and with the potential exception of automated security patches) are consistently 1. Documented, 2. Tested, and 3. Approved. The scope identified for testing included: Application #1, Operating System #1, Database #1, and Network #1. |

Evaluation

Policy:

The Assessed Entity has two separate Change Management policies:

- Change Management Policy #1 is documented for Application #1, Operating System #1, and Database #1 and states that changes must be documented, tested, and approved. (All three elements are covered)

- Change Management Policy #2 is documented for Network #1 and states that changes must be documented and approved. Since testing of changes is not explicitly stated in the policy, only 2 of 3 elements are covered.

Policy Strength: In this scenario, a documented policy exists for all four scope components, so they are all scored at “Tier 2”. Since they are scored at the same tier on the rubric, the Policy coverage scores can be averaged to determine the overall score for the Policy Maturity Level.

Policy Coverage: To determine the Policy coverage, consider the percentage of evaluative elements covered for each scope component.

Example 3 Assessment Results for Coverage – Policy

| Evaluative Elements | Application #1 | Operating System #1 | Database #1 | Network #1 |
| --- | --- | --- | --- | --- |
| Changes are documented | Covered | Covered | Covered | Covered |
| Changes are tested | Covered | Covered | Covered | Not Covered |
| Changes are approved | Covered | Covered | Covered | Covered |
| Coverage | 100% | 100% | 100% | 67% |

An average of the scores, (100% + 100% + 100% + 67%)/4, indicates that overall coverage is 91.75%, which is “Very High” Policy coverage.
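As a hedged illustration of this average, the sketch below computes it twice: once from the rounded per-component percentages shown in the table, and once from exact fractions. The variable names are hypothetical. The two results differ slightly because 2/3 is rounded to 67% in the table; the rating is unaffected provided the “Very High” band starts at or below the unrounded value, which should be confirmed against the rubric.

```python
from fractions import Fraction

TOTAL_ELEMENTS = 3

# Number of evaluative elements covered by policy, per scope component.
covered_elements = {
    "Application #1": 3,
    "Operating System #1": 3,
    "Database #1": 3,
    "Network #1": 2,
}

# Per-component coverage rounded to whole percentages, as in the table.
rounded = [round(100 * n / TOTAL_ELEMENTS) for n in covered_elements.values()]
average_rounded = sum(rounded) / len(rounded)

# The same average computed from exact fractions, without rounding.
exact = [Fraction(100 * n, TOTAL_ELEMENTS) for n in covered_elements.values()]
average_exact = sum(exact) / len(exact)

print(rounded)
print(f"Average of rounded values: {average_rounded}%")
print(f"Average of exact values:   {float(average_exact):.2f}%")
```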

Policy Score: Use the strength and coverage to determine the final Policy score according to the rubric. “Tier 2” strength and “Very High” coverage indicate a score of Fully Compliant or 100%.

Procedure:

It was determined that a Change Management Procedure was in place stating how to document, test, and approve changes for Application #1, Operating System #1, and Database #1. There was no documented Change Management procedure in place for Network #1. However, the External Assessor determined, via walkthrough and observations of the Change Process, that the Change Management process was well-understood by the Network Administrators and that it required change documentation, testing, and approvals for all Network changes.

Procedure Strength: In this scenario, a documented procedure exists for three of the four scope components, so those three (Application #1, Operating System #1, and Database #1) are scored at “Tier 2”. However, the procedure for Network changes was not documented. Since it was observed to be performed and well-understood by those performing the procedure, it can be scored at “Tier 1 – Undocumented Procedure”.

Procedure Coverage: To determine the Procedure coverage, consider the percentage of evaluative elements covered for each scope component.

Example 3 Assessment Results for Coverage – Procedure

| Evaluative Elements | Application #1 | Operating System #1 | Database #1 | Network #1 |
| --- | --- | --- | --- | --- |
| Changes are documented | Covered | Covered | Covered | Covered |
| Changes are tested | Covered | Covered | Covered | Covered |
| Changes are approved | Covered | Covered | Covered | Covered |
| Coverage | 100% | 100% | 100% | 100% |

In this instance, an alternate method will be utilized for calculating the rubric score since there were differing strength scores. This calculation will also show how weighting may be utilized in scoring.

Since Application #1, Operating System #1, and Database #1 all follow the same Change Management Procedure, these will have the same score. The Change Management process for those three scope components has a score of 100% or Fully Compliant (“Tier 2” strength and “Very High” coverage).

The Change Management Process for Network #1 is scored at 25% or Somewhat Compliant (“Tier 1” strength and “Very High” coverage).

Procedure Score: In this example, there are rubric scores of Fully Compliant and Somewhat Compliant. Since 3 of the 4 scope components follow the change management process scored at Fully Compliant, a weight of 75% can be used for that score. The Network change management process, scored at Somewhat Compliant, is weighted at 25%. Combining these scores yields (0.75 × 100%) + (0.25 × 25%) = 81.25%, or Mostly Compliant.
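The weighting described above can be sketched as follows. The grouping structure and names are illustrative assumptions; the weights are derived from the number of scope components that follow each process, as in the example.

```python
# (description, number of scope components covered, rubric score in %)
process_groups = [
    ("Change Management Procedure (Application, OS, Database)", 3, 100),
    ("Undocumented Network change process", 1, 25),
]

total_components = sum(count for _, count, _ in process_groups)

# Weight each rubric score by its share of the scope components.
weighted_score = sum(
    (count / total_components) * score for _, count, score in process_groups
)

for name, count, score in process_groups:
    print(f"{name}: weight {count / total_components:.0%}, score {score}%")
print(f"Weighted Procedure score: {weighted_score}%")
```

Deriving the weights from component counts, rather than hard-coding 75% and 25%, keeps the calculation valid if scope components are added or removed.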

Implemented:

During testing of the Change Management process, the External Assessor noted that:

For Change Management Process #1 (Application #1, Operating System #1, and Database #1): Ten (10) changes were sampled. Of these, 5 were properly documented, 3 were properly tested, and 3 were approved.

For Change Management Process #2 (Network #1): Ten (10) changes were sampled. Of these, 2 were properly documented, 1 was properly tested, and 1 was approved.

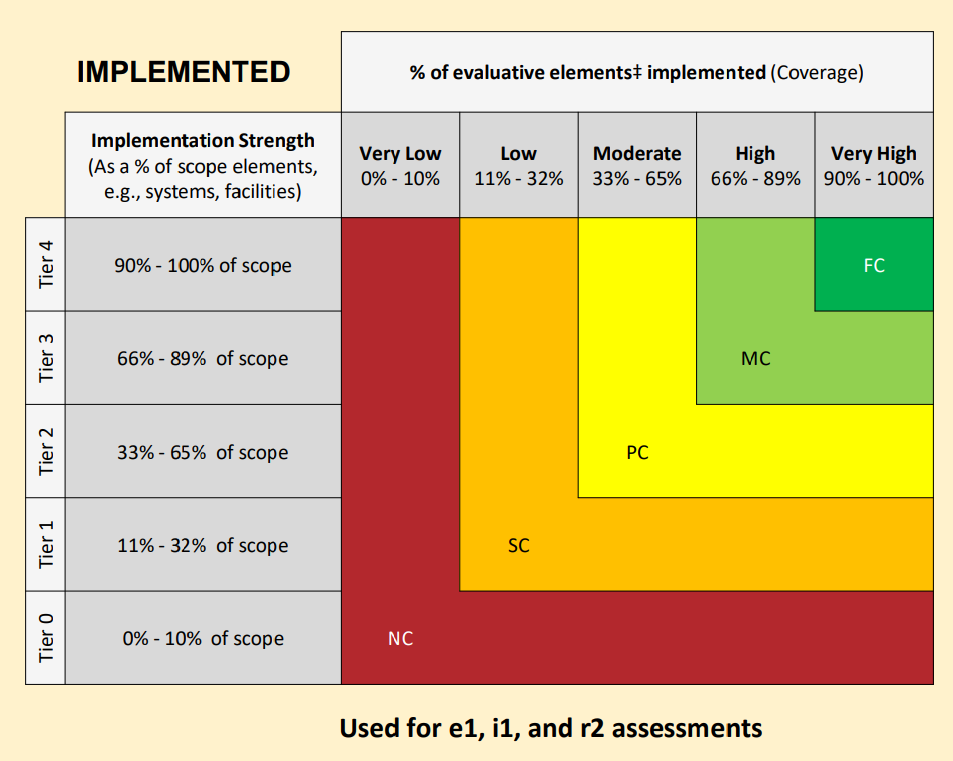

While the same scoring approach for the Implemented level can be taken as in Example #2, this example highlights an alternate acceptable scoring approach (which may be easier for some requirements) that combines scope components and evaluative elements into one table.

In this example, the testing results for each evaluative element will be entered into a table with the scope components listed on the Implementation Strength axis and the evaluative elements on the Implementation Coverage axis.

Example 3 Assessment Results for Coverage – Implemented

| Scope Components | Changes are Documented | Changes are Tested | Changes are Approved |
| --- | --- | --- | --- |
| Application #1 | 50% | 30% | 30% |
| Operating System #1 | 50% | 30% | 30% |
| Database #1 | 50% | 30% | 30% |
| Network #1 | 20% | 10% | 10% |

Implemented Score: The above table combines the strength and coverage to determine the final Implemented score according to the rubric. If the above scores are averaged (370%/12), a score of 31% is achieved, which corresponds to an overall rating of Somewhat Compliant.
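The combined-table average can be checked with a short sketch. The dictionary layout and names are hypothetical; the pass counts come from the sample testing results described above, with the Change Management Process #1 results applied to each of its three scope components.

```python
SAMPLE_SIZE = 10

# Sampled changes that passed each evaluative element, per scope component.
process_1 = {"documented": 5, "tested": 3, "approved": 3}
process_2 = {"documented": 2, "tested": 1, "approved": 1}

passed = {
    "Application #1": process_1,
    "Operating System #1": process_1,
    "Database #1": process_1,
    "Network #1": process_2,
}

# One percentage per table cell (scope component x evaluative element).
cells = [
    100 * count / SAMPLE_SIZE
    for elements in passed.values()
    for count in elements.values()
]

average = sum(cells) / len(cells)
print(f"Sum of cells: {sum(cells):.0f}% across {len(cells)} cells")
print(f"Average: {average:.2f}% (rounds to {round(average)}%)")
```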